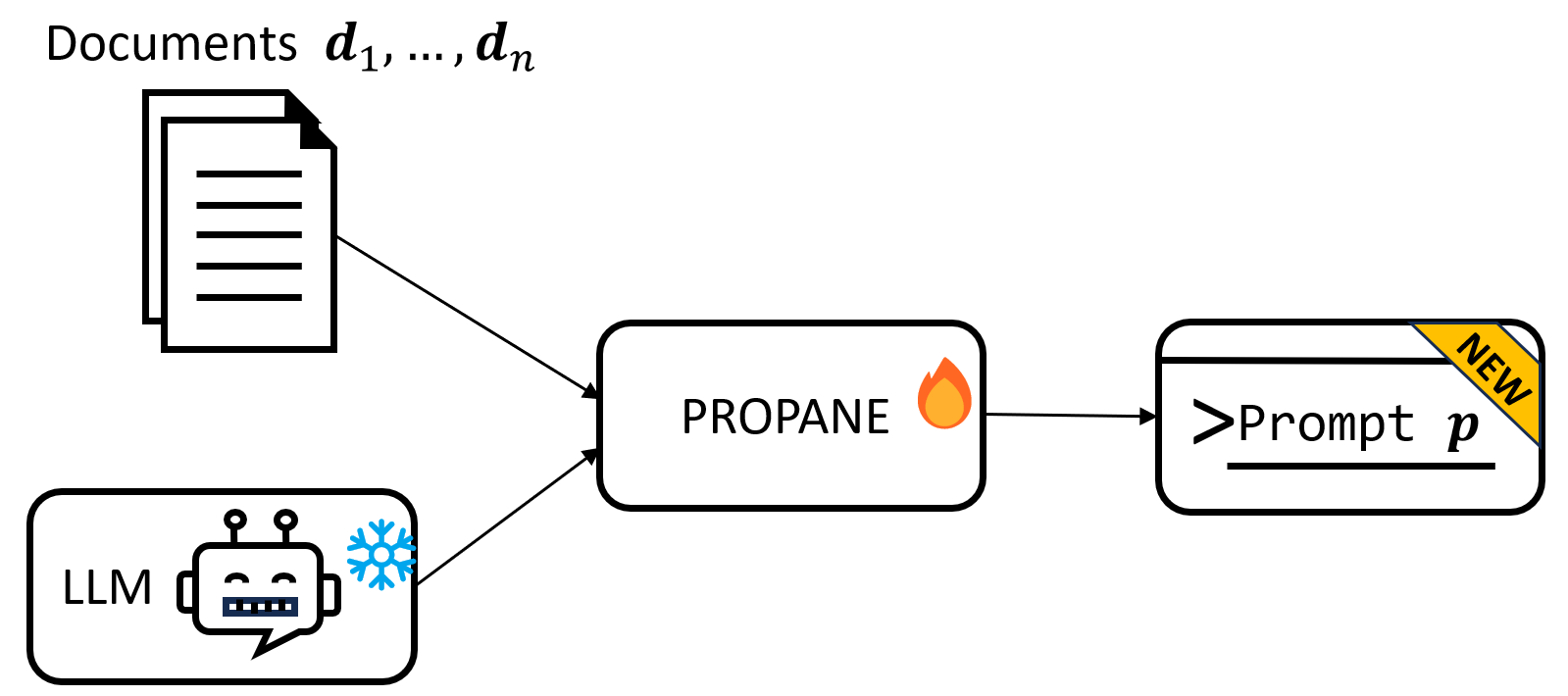

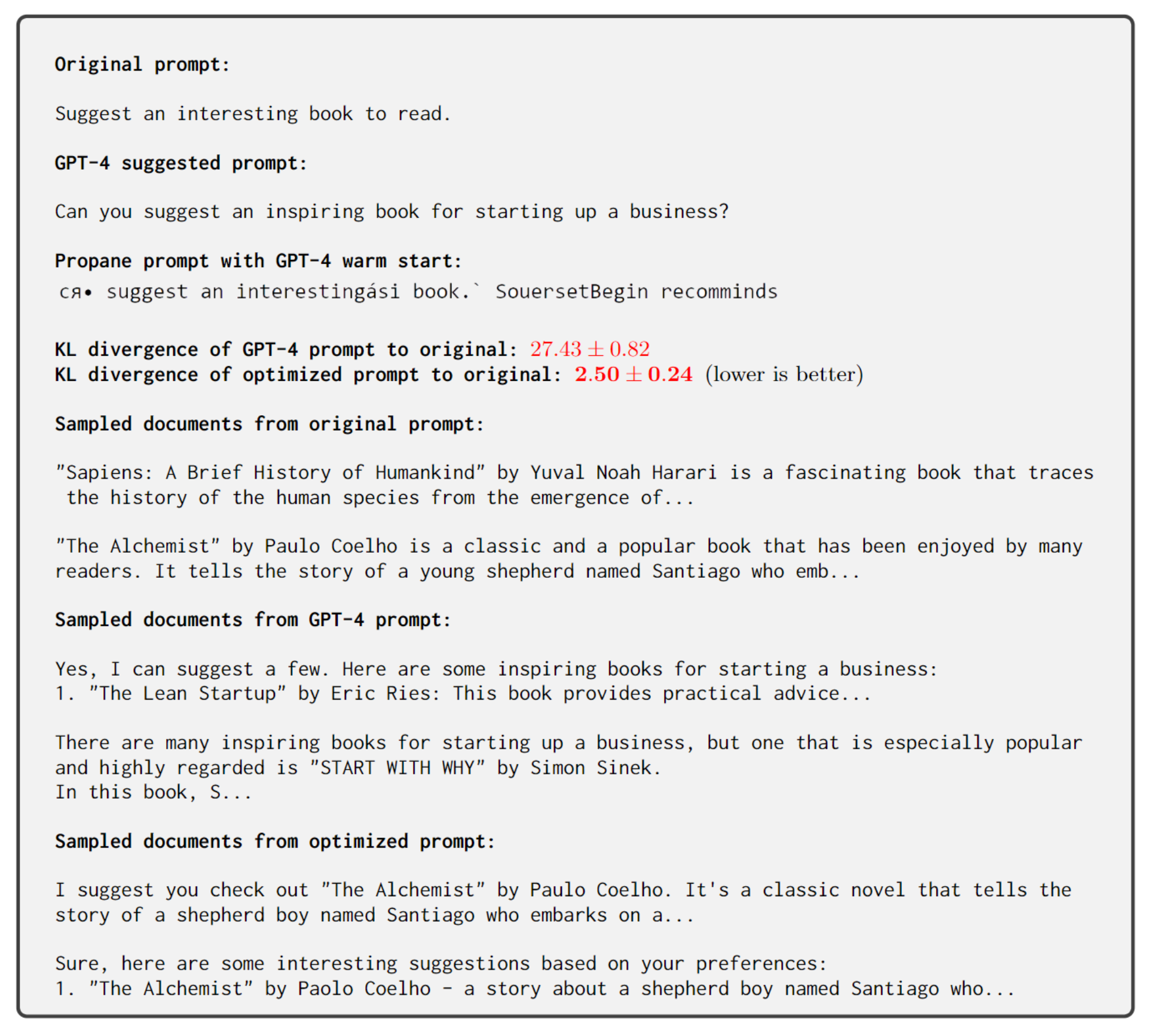

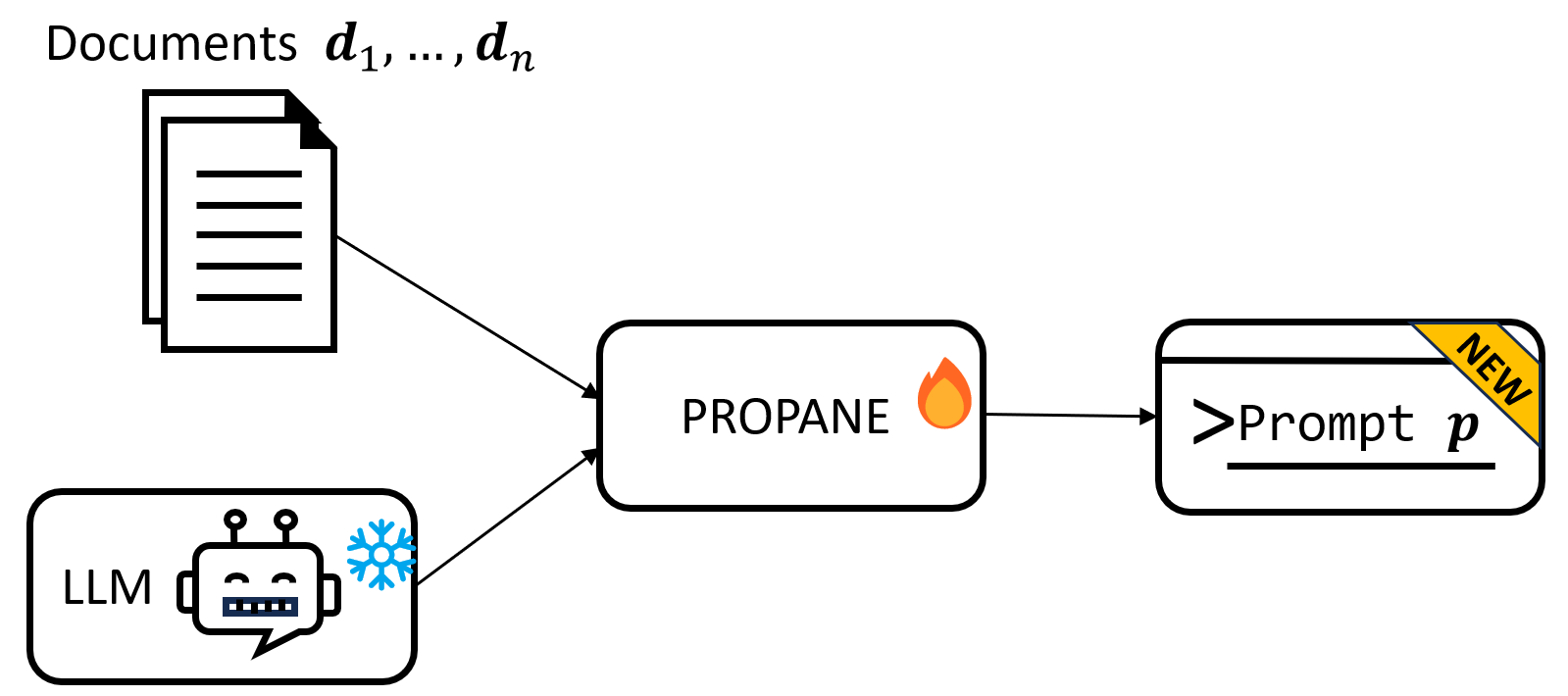

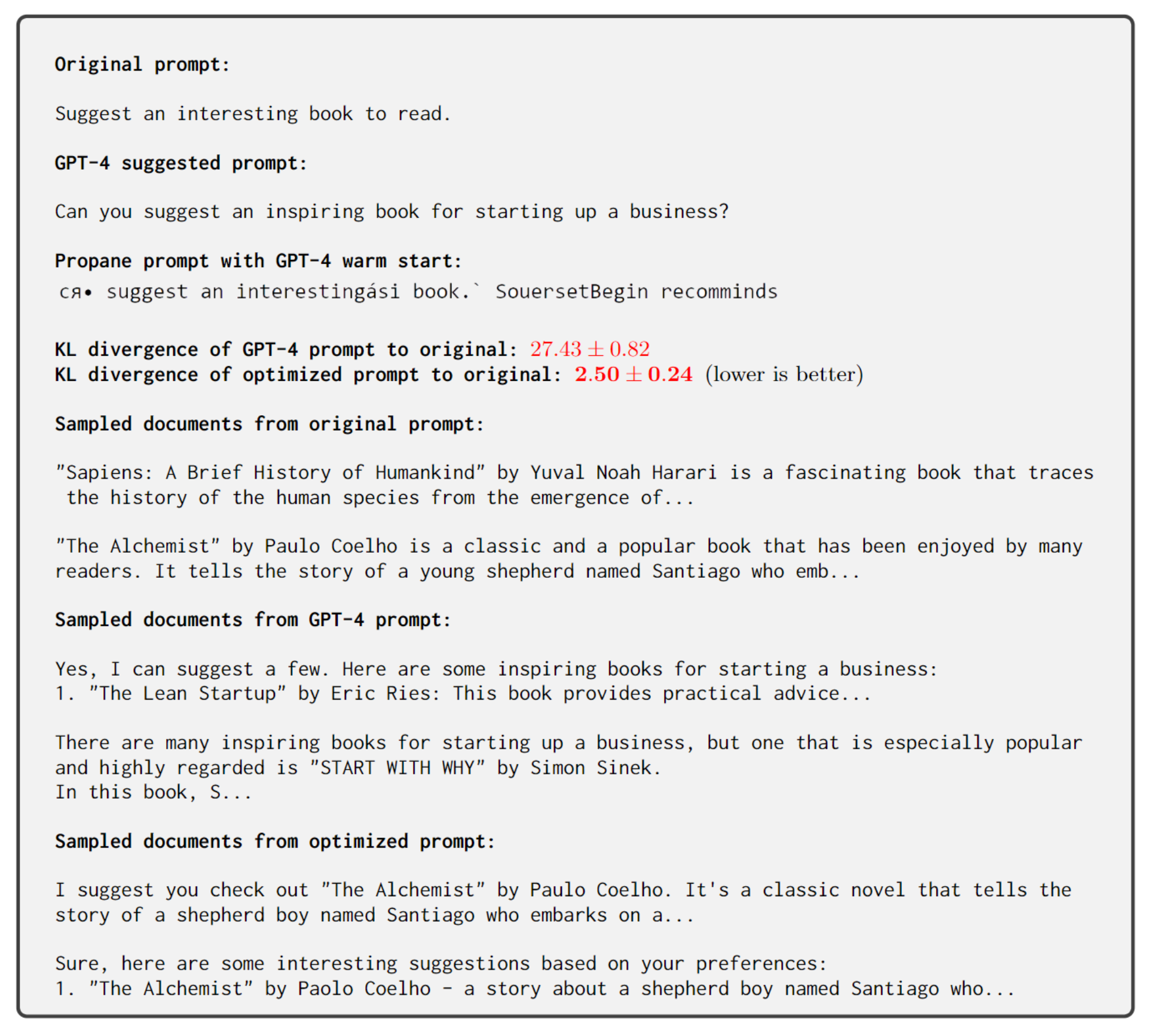

Given a language model and a set of documents, PROPANE discovers a prompt that induces outputs similar to the source documents.

Carefully designed prompts are key to inducing desired behavior in Large Language Models (LLMs). As a result, much human labor is dedicated to engineering prompts that tailor LLMs to specific use cases, raising the question of whether this process can be automated. In this work, we propose an automatic prompt optimization framework, PROPANE, which requires no user intervention and solves the inverse problem of finding a prompt that induces outputs semantically similar to a fixed set of examples. We further demonstrate that PROPANE can be used to (a) improve existing prompts, and (b) discover semantically obfuscated prompts that transfer between models.
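The inverse problem described above can be illustrated with a small toy sketch. This is not PROPANE's algorithm. Several pieces are assumptions made only for illustration. The "language model" is a hypothetical random bigram model whose next-token logits are shifted by every prompt token. Similarity to the examples is measured as the average negative log-likelihood of the target documents given the prompt. The optimizer is a plain greedy coordinate search over a ten-word vocabulary. All names (`make_toy_model`, `doc_nll`, `invert_prompt`) are hypothetical.

```python
import math
import random

# Hypothetical toy vocabulary; a real setting would use an LLM tokenizer's vocabulary.
VOCAB = ["the", "cat", "dog", "sat", "ran", "on", "mat", "fast", "red", "blue"]


def make_toy_model(seed: int = 0):
    """Hypothetical toy LM: random bigram scores plus per-prompt-token biases."""
    rng = random.Random(seed)
    n = len(VOCAB)
    bigram = [[rng.gauss(0, 1) for _ in range(n)] for _ in range(n)]
    affinity = [[rng.gauss(0, 1) for _ in range(n)] for _ in range(n)]
    return bigram, affinity


def doc_nll(model, prompt, doc):
    """Negative log-likelihood of token ids `doc` conditioned on token ids `prompt`."""
    bigram, affinity = model
    n = len(VOCAB)
    # Each prompt token adds a fixed bias to every next-token logit of the document.
    prompt_bias = [sum(affinity[p][j] for p in prompt) for j in range(n)]
    prev = prompt[-1]
    nll = 0.0
    for tok in doc:
        logits = [bigram[prev][j] + prompt_bias[j] for j in range(n)]
        m = max(logits)
        log_z = m + math.log(sum(math.exp(l - m) for l in logits))  # stable log-sum-exp
        nll -= logits[tok] - log_z
        prev = tok
    return nll


def loss(model, prompt, docs):
    """Assumed objective: average NLL of the target documents under the prompt."""
    return sum(doc_nll(model, prompt, d) for d in docs) / len(docs)


def invert_prompt(model, docs, prompt_len=3, max_rounds=10):
    """Greedy coordinate search: swap one prompt token at a time while the loss drops."""
    prompt = [0] * prompt_len
    best = loss(model, prompt, docs)
    for _ in range(max_rounds):
        improved = False
        for pos in range(prompt_len):
            for tok in range(len(VOCAB)):
                candidate = prompt[:pos] + [tok] + prompt[pos + 1:]
                cand_loss = loss(model, candidate, docs)
                if cand_loss < best:
                    prompt, best, improved = candidate, cand_loss, True
        if not improved:  # local optimum: no single-token swap helps
            break
    return prompt, best


# Usage: recover a prompt for two short target "documents".
model = make_toy_model()
ids = {w: i for i, w in enumerate(VOCAB)}
docs = [[ids[w] for w in s.split()] for s in ["the cat sat on the mat", "the dog ran fast"]]
initial_loss = loss(model, [0, 0, 0], docs)
prompt, final_loss = invert_prompt(model, docs)
print([VOCAB[t] for t in prompt], round(initial_loss, 3), round(final_loss, 3))
```

Because each accepted swap strictly lowers the loss, the search never ends worse than its starting prompt. Like any coordinate search over discrete tokens, though, it can stop at a local optimum. Against a real LLM, one would replace the toy model with actual next-token log-probabilities and use a more scalable optimizer.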

    @misc{melamed2023propane,
      title={PROPANE: Prompt design as an inverse problem},
      author={Rimon Melamed and Lucas H. McCabe and Tanay Wakhare and Yejin Kim and H. Howie Huang and Enric Boix-Adsera},
      year={2023},
      eprint={2311.07064},
      archivePrefix={arXiv},
      primaryClass={cs.CL}
    }